W&W #11: How to be a "smart" AI user?

- sharewithjasmine

- Oct 30, 2025

"People often talk about the importance of finding answers, but I've realized the real power lies in asking the right questions. 'Wander & Wonder' is my series about that very journey: from the days of 'wandering' aimlessly through forks in the road and career decisions, to the 'wonder' moment, that instant I dared to ask and start seeking answers to my own inner callings. Welcome to post #11 in the 'Wander & Wonder' series. Let's think through this question together: 'How to be a smart AI user?'" - Jasmine Nguyen

Last weekend, I had the chance to catch up with an ex-colleague from TikTok who now works at Amazon. We had a fascinating (and sobering) conversation about the tech job market. One thing we both agreed on: from now until the end of the year, the layoff wave at major tech companies will be pretty brutal, and AI replacing human jobs is a reality that is already unfolding. And then, it happened.

Two days ago, Amazon announced 14,000 job cuts, replacing those roles with AI. This is no longer a distant "discourse"; it's a frightening reality. It makes my earlier observations feel even more urgent.

During my recent summer studies, I also observed something pretty interesting. All the students from Canada and Poland used ChatGPT for group work, information seeking, and personal brainstorming. Some even used ChatGPT's output directly, as if they completely trusted what they received and adopted it as their own opinion. After all, AI is just systems, data, and logic.

In Vietnam, it's a similar story. A headache I often ran into in group study was receiving work that came 100% from AI, with no critical feedback or personal refinement.

And that's just school. Now, reality is even more alarming. When the news of 14,000 Amazon employees being replaced by AI broke, the 'discourse' of AI replacing humans became fact. This fear is real. Even when I see a job on LinkedIn and get an assessment of my profile's fit, like "top applicant," "high matching," or "medium," I can't help but wonder: which AI is making this assessment, and what are its criteria?

All of this led me to the urgent question I want to explore with you today in Wander & Wonder #11: 'How to be a smart AI user?'

I've also used AI a lot in my work and life over the past two years, starting with ChatGPT and even Gemini, back when it was in beta and still called Bard. I'm not asking, "What can AI do?" The whole world is already seeing proof of its capabilities. Instead, two other, more important questions need our attention:

Who is AI? (Or more accurately, what ideology is behind it?)

What will AI never be able to do?

As we seek answers to these smaller questions, we'll somehow find a perspective for the bigger one.

And the "smart" I'm talking about here, in this context, is really about "survival". It’s about understanding and effectively using AI tools in the present. As for future AI, it will likely be beyond our comprehension, at which point we'll probably be learning how to survive with it more than just "using" it.

So, who is AI? Is it objective enough?

We often hear about AI models achieving "human-level performance" and responding "similarly to humans." When you hear this, do you ever think AI is 'objective' because it's a machine, it runs on data, and it's not biased? I've had my doubts. Modern AI (such as deep learning) doesn't operate as a strict formal logic system; it works by recognizing patterns in massive amounts of data. So I assumed it must be objective to some degree, but I always questioned what that degree was.

Then I stumbled upon "Which Humans?", an analysis by a research group at Harvard, and found it fascinating.

The report shows that the largest AI models, large language models (LLMs), are heavily "biased": not in the usual sense, but culturally. The study points out that these LLMs are actually outliers when compared against large-scale global psychological data. They "ignore the substantial psychological diversity" of the human species.

The scientists call this "WEIRD bias"

WEIRD is an acronym for: Western, Educated, Industrialized, Rich, and Democratic. This is a group of people who are "psychologically peculiar" in a global and historical context. They make up only a small fraction of the world's population but have produced the vast majority of the text data on the internet, causing the AI's training data to be "disproportionately WEIRD-biased".

The result? The authors came to a powerful conclusion: "WEIRD in, WEIRD out".

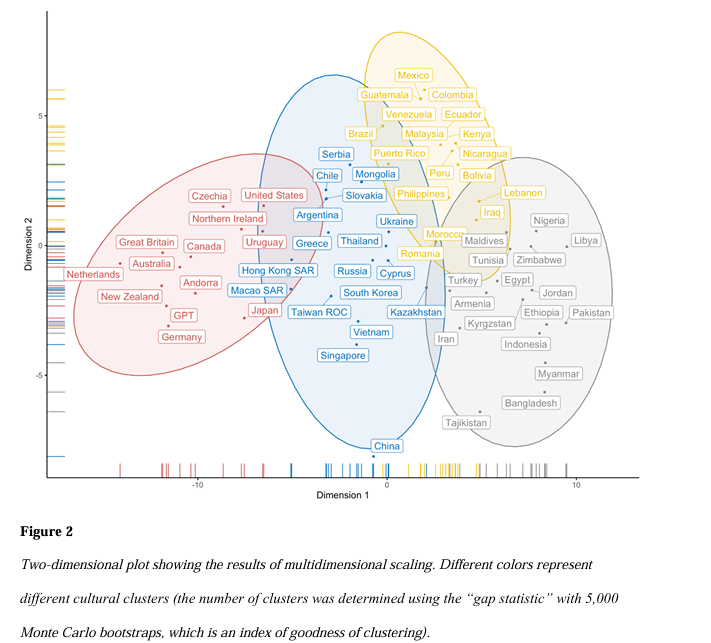

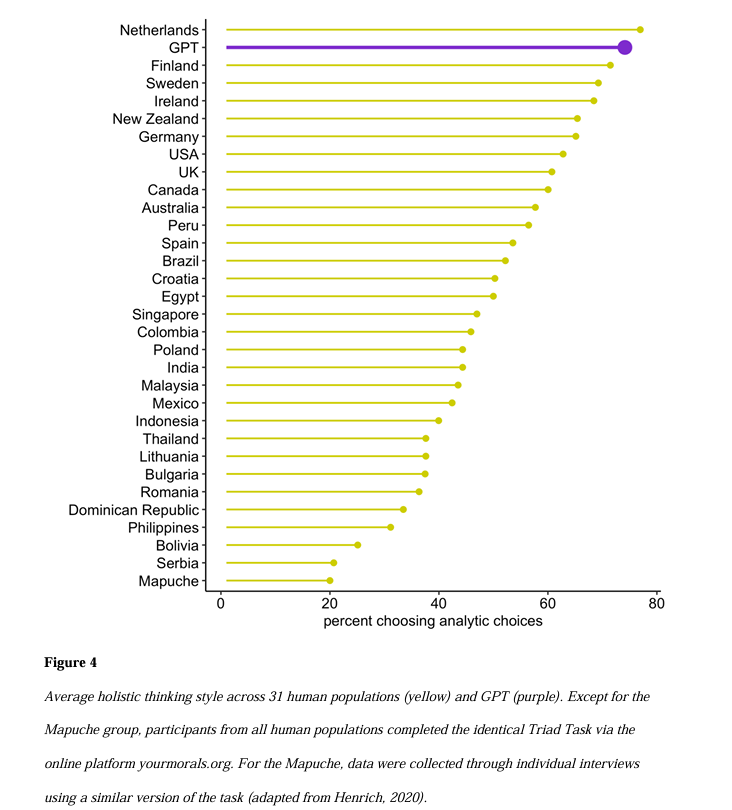

AI isn't "human-like"; it's "WEIRD-like." These models "inherit a WEIRD psychology" and most resemble people from WEIRD societies. For example, when asked about life values (such as political attitudes and social values), AI answers like an American: cluster analysis shows GPT is closest to the United States and Uruguay. When given a thinking-style test, it thinks "analytically," a hallmark of WEIRD people, whereas less-WEIRD cultures, as in many Asian countries, tend to think "holistically."

This chart shows GPT has an extremely high rate of choosing 'analytic' options, similar to countries like the Netherlands, Finland, and Sweden.

What does this suggest for Marketers & Managers?

If you're a marketer in Vietnam using AI to "understand" customers, isn't there a risk? Is AI "understanding" Vietnamese customers through the lens of a New Yorker? Could we be creating a campaign that champions "individualism" (a WEIRD value) when our market's true insight is "community"?

And if you're a manager using AI to filter resumes, could it (trained on WEIRD data) unintentionally give lower scores to candidates with "holistic" thinking, a strength in many Asian cultures?

AI, it seems, is a mirror. The problem is, it reflects a very narrow corner of humanity.

So, what about "perfection"?

Honestly, we all know nothing is truly perfect. By 'perfect,' I mean the perception some AI users have when they trust its results enough to base decisions on them, in some cases without any further checking.

If the "WEIRD" problem is an "input data" challenge (an extremely complex problem of defining and balancing cultural diversity that even scientists are still struggling to solve), the second question, what AI will never be able to do, opens a discussion about its inherent limits.

One evening, my husband showed our family a video about Gödel's Incompleteness Theorems, a discovery often considered as monumental as Albert Einstein's Theory of Relativity. The theorems are truly hard to understand deeply, but to put it simply so you can get the gist, I'll share two main points:

Any consistent formal system powerful enough to express basic arithmetic (like math, and perhaps, by analogy, AI) is incomplete. That means there will always be statements that are true but cannot be proved within the system itself.

Between "provable" and "disprovable," there is a middle ground: statements the system can neither prove nor disprove, called "undecidable."

Why is this important?

This got me thinking about AI. Modern AI isn't the kind of formal logic system Gödel described; it's based on probability. But it still operates on computation, so the analogy helps us visualize its limits: it seems to lack a mechanism for this "undecidable" zone.

But what about us? We are wonderful and messy creatures precisely because we live in that "undecidable" zone. We call it intuition, faith, ethics, and culture.

AI can calculate, but it cannot feel. It cannot love. There's an immortal quote from Gödel: "The brain is a computing machine connected with a spirit".

Perhaps, if AI is the "super-computer," humans are the "spirit"? Of course, this is just a personal, philosophical musing to help us think about limits. Applying Gödel's theorems to modern AI is just an analogy, not scientific proof.

Speaking of this fragile boundary between "computer" and "spirit," let's shift the mood a bit with "Her" (2013). I first watched this film 12 years ago and re-watched it most recently this September on a flight from Canada back to Vietnam. The feeling of re-watching was indescribable... a lingering sadness from the film's plot, but now mixed with a new dread about an AI-driven future. The movie is a perfect illustration of that very "undecidable" zone—the place where pure logic stops, and love, loneliness, and connection begin.

If we think AI handles the logic, and we humans handle the "spirit" (ethics, creativity, intuition), everything seems simple. Our jobs are so noble!

But then, another question popped up: Am I 'romanticizing' humanity?

We criticize AI for its "WEIRD bias". But what about our own intuition? Is our intuition so "pure"? Or is it also riddled with biases (confirmation bias, in-group bias, implicit biases...)?

We call AI "cold." But isn't it our own uncontrolled "emotions" that have caused countless disastrous decisions in history?

Are we drawing too clean a line: Logic (AI) vs. Emotion (Human)? Perhaps that is the real shortsightedness?

Reality is always messier. The reality is that a biased manager might be using a biased AI tool to make a biased decision.

I'm starting to think that AI's greatest value isn't giving us answers. Its greatest value is forcing us to ask sharper questions.

Instead of asking, "How can I use AI to understand my customers?" maybe the question is, "What is this AI (with its WEIRD mirror) showing me that I don't understand about my own local culture?"

Instead of asking, "How can AI make ethical decisions?" maybe the question is, "How has trying to teach AI ethics exposed the contradictions and incompleteness in our own ethical systems?"

AI isn't a sage we consult. It's more like a critical sparring partner. It's a mirror, one that is both "distorted" and "incomplete," and it gives us another angle to achieve multi-dimensional thinking.

Our job, as marketers and managers, isn't to trust that mirror blindly. It is to:

- Recognize its distortions.

- Fill in its incomplete parts.

- And most importantly, use it to "reflect" on our own biases and incompleteness.

In the end, the sad reality is that AI is replacing some of our jobs, as we've seen. But it isn't here to replace all of us. It's here to force us to become more human—a deeper, more self-aware, and less-biased version of ourselves.

If someone asked me what "using AI smartly" means, I'd admit that, in this alarming context, I'm no master with an immediate answer. But for me, it's not just about writing prompts; it's about building a "Human-AI Collaborative Workflow." This is the process I've used to work with AI recently:

Step 1: I always take time away from the computer, alone, to think about my goals and expected outcomes for a specific task I plan to use AI for. This is like thinking about strategy before tactics. It gives me a 'filter.'

Step 2: Use AI as a search and brainstorming tool. This is where we leverage its speed and massive data retrieval.

Step 3: Analyze the AI's output and start filtering ideas and info based on the goals and criteria I set from the beginning. The ability to ask questions is a critical skill when working with AI tools.

Step 4: Create the personal touch, especially the emotional component. I always ask, "Will this resonate with the reader/viewer/participant?" Creativity and empathy live here.

Step 5: Finally, I polish the words, consider the risks, and do the final refinement.

That's how I work with AI, at least for now. Who knows? Maybe as soon as tomorrow, we'll see AI versions that are more "multicultural." When that happens, we'll probably need to dig even deeper into that timeless question, "Who are we?"... And this week's news proves, more than ever, that people who know how to use AI will replace those who don't (or those who use it ineffectively).

What do you think?

As I write this, another question has sparked about this so-called "smartness" in using AI, so I think I'll see you in a future W&W article: are we experiencing an "illusion of competence"?

Jasmine Nguyễn

Sources of information:

Nagel, E., & Newman, J. R. (2001). Gödel's proof (Rev. ed.). New York University Press. (Original work published 1958)

Atari, M., Xue, M. J., Park, P. S., Blasi, D. E., & Henrich, J. (2023). Which Humans? Department of Human Evolutionary Biology, Harvard University.
